הטכניון יצא לחופשת פסח ויחזור לפעילות סדירה ביום ראשון 20/04. חג שמח!

מחברות סטארט-אפ ועד לקווי החזית, הבוגרים החדשים מגלמים את שילוב הערכים, העשייה והקדמה

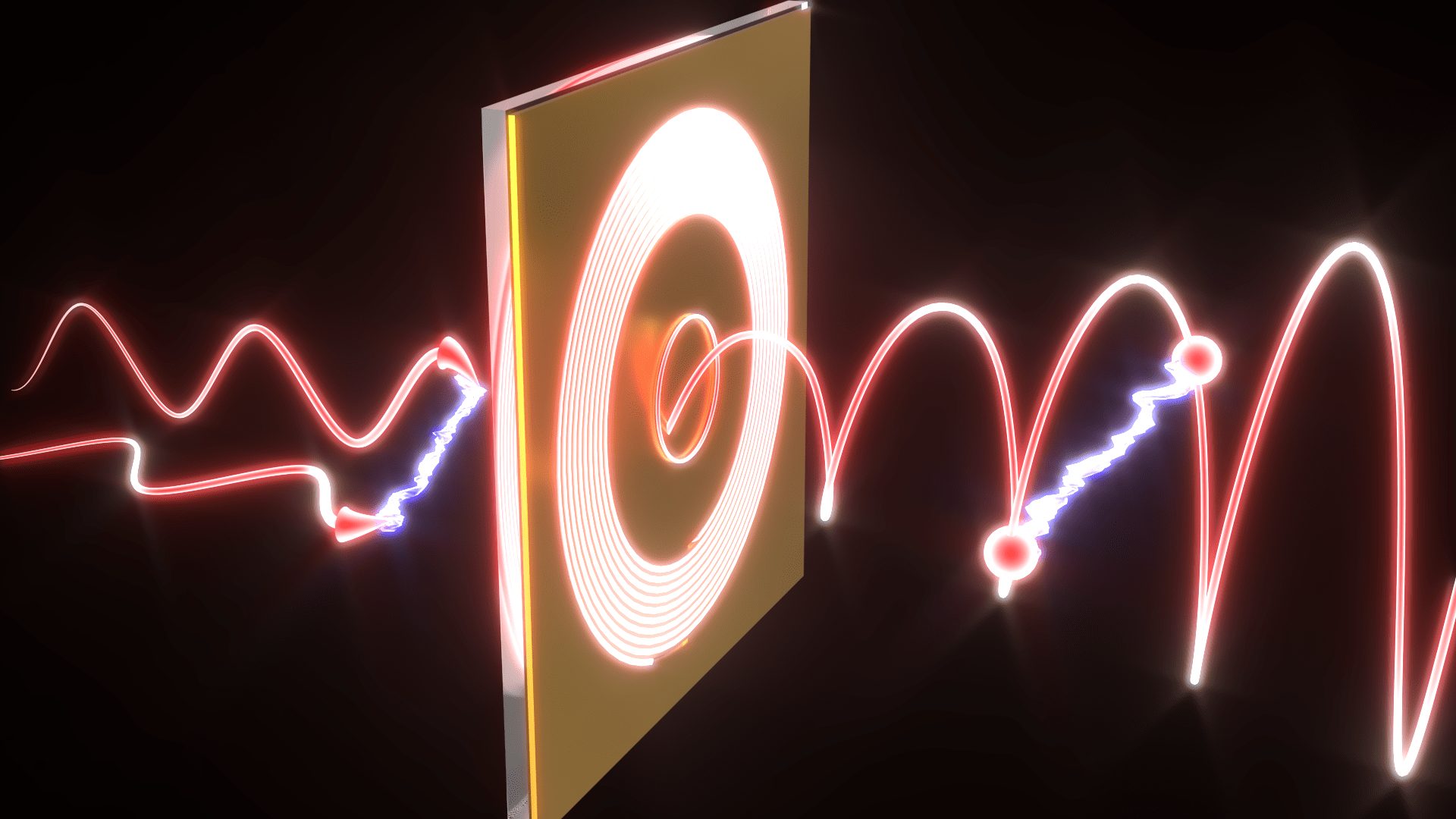

השזירות שהתגלתה מתקיימת בתנע הזוויתי הכולל של חלקיקי אור (פוטונים) הלכודים במבנים זעירים שגודלם כאלפית השערה. תגלית זו תתרום למזעור עתידי של רכיבים לתקשורת קוונטית ומחשוב קוונטי

אליס סיבק מפתח תקווה זכתה במקום הראשון באולימפיאדת הביוטכנולוגיה השמינית בטכניון

100000

בוגרים

18

פקולטות

15000

סטודנטים

60

מרכזי מחקר

ברחבי הקמפוס